Guaranteed stable sliding Goertzel implementation

Is it possible that a Chamberlin state variable implementation of the Sliding Goertzel (SG) resonator provides a stable implementation without introducing a dampening factor?

One problem with using a dampening factor in a given implementation is, how to calculate the lowest possible dampening factor that ensures stability?

I am looking for a low complexity guaranteed stable single bin sliding DFT implementation. In the article "Single Bin Sliding Discrete Fourier Transform" more guaranteed stable implementations of SDFT and SG are described. Most stable implementations require using a dampening factor to move the poles inside the unit circle. The mSDFT provides a guaranteed stable implementation that does not require a dampening factor but requires complex multiplication and addition.

I started out implemented the sliding Goertzel algorithm using IIR direct form 2 for the resonator. For sliding Goertzel, the poles are always on the unit circle and the filter is marginally stable. Even when using double precision floating point, the output slowly drifts. The problem is a limited precision accumulator. One solution to this problem is to move the poles inside the unit circle by using a dampening factor. In that case, I would have to figure out how much the poles had to be moved for the direct form 2 realization to become stable. Of course, I could always degrade performance and just move the poles longer than needed to be on the safe side but it would be a lot better to know the exact requirement. Any hints would be appreciated.

Then the sliding Goertzel was implemented with the resonator in state variable form, like described in the "The digital state variable filter" online article and the book by Chamberlin. When the resonator is realized using the state variable structure, the bandpass output is used and q is zero (infinite Q), making it similar to a sine oscillator. The resonator seems to be stable with both single and double precision floating point. However, I do not know if it really is stable or I just have not tested with an input that provokes the instability. I think my colleague has tested it with fixed point and in that case it was not stable (I will ask again or try it). Maybe, as the resonator contains non-integer coefficients, the implementation cannot be guaranteed stable. If I could prove that the output is guaranteed stable using floating point, I can keep Q at infinity. Otherwise I could find a suitable Q that guarantees stability for a given precision by the cost of extra multiplications. Again, I would prefer knowing the exact Q value that ensures stability instead of guessing.

I have not considered floating point denormals and how it affects performance but my main concern right now is about the stability issue.

References:

"The Sliding DFT", IEEE Signal Processing Magazine, Mar. 2003

"An update to the sliding DFT", Article in IEEE Signal Processing Magazine, February 2004

"Correcting an Important Goertzel Filter Misconception", www.dsprelated.com

"Understanding and Implementing the Sliding DFT", www.dsprelated.com

"Single Bin Sliding Discrete Fourier Transform", 2016, Carlos Martin Orallo and Ignacio Carugati

"Musical applications of microprocessors", Hal Chamberlin

"The digital state variable filter", https://www.earlevel.com

Best,

Kim

Any zero-damping (infinite Q) resonator is metastable by definition. Any noise that gets in will cause a response that persists forever.

Any finite-precision operation that multiplies a quantity by a number less than one and feeds it back on itself has, by definition, quantization effects. These quantization effects are, effectively, noise.

So, you can relax -- no matter what you do, if it's a Goertzel, you're out of luck.

But look at this in context: the Goertzel algorithm was developed at a time when memory and processor time was comparatively far more expensive than engineering hours. It's a really nice, solid, clever trick for dealing with a specific problem in a world where you can't fit a billion transistors on your thumbnail.

But I just checked: we do live in a world where you can fit a billion transistors on your thumbnail, at least if your thumbnails are as big as mine: (https://www.anandtech.com/show/7003/the-haswell-review-intel-core-i74770k-i54560k-tested/5). So make a perpetual trophy for the Goertzel algorithm, and retire it.

Instead, use up a few transistors to make a sine table in read-only memory. Use up a few more to write some code. Then keep some busy by implementing an I/Q demodulation step followed by a moving average filter. If you're concerned about overflow, turn everything into fixed-point and use the CIC trick of an accumulator that's guaranteed to roll over no more than once in an averaging interval, so that subtracting past values from current values all come out correctly. That way the metastability of a baseband moving average filter will happen, but will be canceled out by a pair of complementary nonlinear actions.

signal --> (I/Q demod) --> complex baseband signal --> moving average

Can you elaborate on your preferred approach to the "I/Q" demodulation step" you refer to? I'm looking for more elegant ways to perform that step than what I've done in the past. Similar to your description, I typically mix the input with a complex LO generated from a sine table, which is simple enough. But calculating the complex magnitude (AM demodulation) is annoying. Root-sum-square is harmonic free but computationally expensive, and octagonal approximation (next best thing I've found) is barely adequate, from a harmonic perspective, depending on the task. What is your preferred approach?

Thanks!

I doubt I'll be much help -- I lean more toward brute-force approaches (i.e. just make a sine table, and index into it).

And, for the AM demodulation, again, same boat,

true, for magnitude of complex signal root square is expensive. I have used cordic before and it is cheap but suffers latency.

Thank you for the inputs.

As I wrote, it was also my thought that non-integer coefficients would lead to instability. However, in the "Musical applications of microprocessors" book page 491 Hal writes:

"Actually, the roundoff errors in integer and some floating-point computer arithmetic cancel out each cycle so that there is no net accumulation of error"

Is it possible that the roundoff errors really cancel out and has it something to do with the coefficients being +/-f (identical)?

Which lead me to the original question - does this feature still hold when used as a bandpass filter with infinite Q?

Why use the scheme

signal --> (I/Q demod) --> complex baseband signal --> moving average

instead of the mSDFT implementation?

For my applications, the output only needs to be calculated once per block of samples.

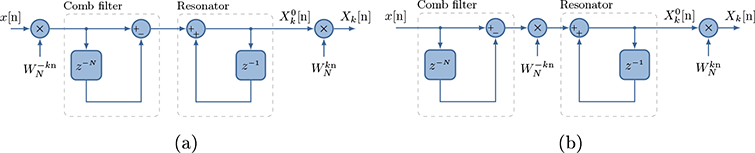

As a backup plan, I was thinking to use the figure 3b mSDFT realization from "Single Bin Sliding Discrete Fourier Transform". The exp(-j*2pi*k*n/N) values could be stored in a lookup table. I only have to perform the final complex multiplication when I need the output. In cases where I only need the output magnitude, the final complex multiplication is unneeded.

Best,

Kim

"For my applications, the output only needs to be calculated once per block of samples."

Then you don't need a sliding anything!

The "sliding" in sliding Goertzel or moving (sliding) average or sliding DFT means that the result is computed sample by sample rather than as a batch per block.

So just do a normal Goertzel at the end of each block, then set its state to zero, then repeat. If latency is an issue, do a normal Goertzel with an update each sample (which means the states will be building up), use the output, then zero the states, then proceed.

I want a sliding algorithm as it is not necessarily each block but could be any time I want the output. Also, the length N will be much greater than the block size, which could also vary. I wrote the note about each block to make it clear that I do not have to calculate the output each sample and therefore that part does not have to be cheap.

You may consider direct DFT as follows:

multiply input by exp(-j*2pi*k*n/N) , then accumulate to end of a chosen frame size.

Then to make it sliding, ideally you need to subtract first input to accumulator (from multiplier result) and allow new input but this could be unrealistic delay pipe. So I suggest subtracting mean of previous frame from accumulator and let it continue, this is to be done from end of first frame to protect accumulator from overflow. The mean is just accumulator result divided by frame size.

Hi kaz.

I might be wrong but what you are describing seems like the basic SDFT. Then you suggest substracting mean of the previous frame from the accumulator.

Does that really make it guaranteed stable?

Also, will that procedure not produce an output that is modulated by the frame size (or create some sort of discontinuity each frame)?

Would the division make it more expensive than for instance using the mSDFT?

Best,

Kim

For an ideal sliding DFT you need a delay pipe to remove first sample of a frame as new sample enters for next frame.

What I see as a problem here is this long delay pipe that requires a lot of memory (unless frame size is small).

So I am suggesting an alternative by assuming every sample is close enough to mean.

Thus I will add a subtractor to the accum input and the value to be subtracted is zero at first frame then is updated at every frame end while subtraction continues per sample into accum.

As such I don’t expect accum overflow since we remove dc build up but update is indeed at end of frame and may be a cause for concern..

Division itself to get mean can be done by discarding lsbs even if frame size is not power of 2 as exact value of mean is not critical

It might be worth modelling it first.

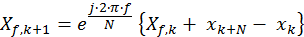

We are talking about the SDFT which can be realized as:

For me, the goal is obtaining the exact same output as using a SDFT but without stability issues.

I have accepted the long delay pipe when using for instance the 3b sMDFT as shown above, as only one delay line is needed even though multiple bins are calculated.

My understanding is that the issue is not accumulator overflow (overflow: a variable is larger than the maximum possible value for that variable). The accumulator value slowly drifts because of its finite precision causing rounding noise to be added whenever there is a non-integer feedback coefficient, unless some trick can make the rounding noise cancel out.

My suggestion was not based on your diagram above. Instead it is based on sheer accumulation of the product of input with exponential...at a given bin, there is no truncation or rounding.You will need one delay line per bin so very expensive.

Hi KimT.

Here are a few papers that discuss ways to implement stable sliding DFTs:

Denis A. Gudovskiy and Lichung Chu, "An Accurate and Stable Sliding DFT Computed by a Modified CIC Filter", IEEE Signal Processing Magazine, Jan., 2017.

Zsolt Kollár, Ferenc Plesznik, and Simon Trumpf , "Observer-Based Recursive Sliding Discrete Fourier Transform", IEEE Signal Processing Magazine, Nov., 2018.

C.-S. Park, "Fast, accurate, and guaranteed stable sliding discrete Fourier transform", IEEE Signal Processing Magazine, July, 2015.

K. Duda, "Accurate, guaranteed stable, sliding discrete Fourier transform", IEEE Signal Processing Magazine, Oct., 2010.Hi Rick.

Thank you for the list of papers with stable realizations. I have briefly looked at all the methods and decided to stick to the mSDFT.

I will use that for applications where longterm stability is a must and maybe use the state variable based Goertzel implementation for less critical tasks.

The mSDFT seems simple, relatively efficient and furthermore is best implemented using fixed point which is a good match for my needs.

Best,

Kim

Hi Rick,

I wonder how this is even allowed to be a 'paper':

Denis A. Gudovskiy and Lichung Chu, "An Accurate and Stable Sliding DFT Computed by a Modified CIC Filter", IEEE Signal Processing Magazine, Jan., 2017.

There is nothing new here. You do the IQ multiplies, and then use a CIC filter as decimator. It's what everybody does...

Hi gretzteam. That "An Accurate and Stable Sliding DFT Computed by a Modified CIC Filter" article was printed in the magazine's 'DSP Tips & Tricks' column. When I have the time I'll read that article to see what "trick" the authors are presenting in that article.